Generating digital music with DirectCsound & VMCI

Gabriel Maldonado

composer, guitarist, computer-music researcher

Fantalogica - Musica Verticale, Rome - Italy

e-mail: g.maldonado@agora.stm.it - g.maldonado@tiscalinet.it

web-page: http://web.tiscalinet.it/G-Maldonado

Abstract

This paper concerns two computer-music programs: DirectCsound, a real-time version of the well-known sound-synthesis language Csound, and VMCI, a GUI program that allows the user to control DirectCsound in real-time. DirectCsound allows complete live control of the synthesis process. The aim of the DirectCsound project is to give the user a powerful, low-cost workstation for producing new sounds and new music interactively, and for giving live performances with the computer. Try to imagine DirectCsound as a universal musical instrument. VMCI (Virtual Midi Control Interface) is a program that sends any kind of MIDI message by means of the mouse and the alphanumeric keyboard. It was designed to be used together with DirectCsound, but it can also control any MIDI instrument. It provides several panels with virtual sliders, virtual joysticks and a virtual piano keyboard. The newer version of the program (VMCI Plus 2.0) allows the user to change more than one parameter at the same time by means of the new Hyper-Vectorial-Synthesis control. VMCI supports seven-bit data as well as higher-resolution fourteen-bit data, both of which are supported by the newest versions of Csound.

1. DirectCsound

Csound [1] is one of the best-known and most widely used music synthesis languages in the world. Csound was originally conceived within the paradigm of deferred-time synthesis languages such as MUSIC V, a philosophy that has remained essentially unchanged since the beginning of the ’60s.

Although this paradigm still satisfies a good many compositional needs, and although the approach retains important advantages, it cannot be denied that it prevents composers from having direct and immediate contact with the sonic matter.

In its original version, Csound could not be used in live performance, because the general-purpose computers of that time (the ’80s) were not fast enough for real-time synthesis. Consequently, the earliest versions of Csound (developed by Barry Vercoe at the Massachusetts Institute of Technology in 1985) did not include any functionality oriented to live control. At the beginning of the ’90s Barry Vercoe [2] added some MIDI-oriented opcodes so that it could be used in real-time. At that time the only machines capable of running Csound in real-time were Silicon Graphics machines and some other expensive UNIX workstations.

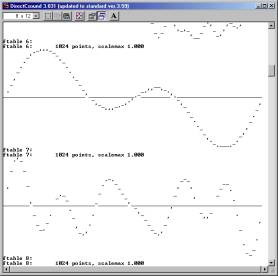

DirectCsound window

Nowadays Intel-based PCs have become fast enough to run Csound in real-time. DirectCsound, a real-time oriented version of Csound, fills many gaps concerning the live control of parameters and the lack of interactivity from which Csound initially suffered. Many new features have been implemented in this version, such as the handling of MIDI input/output (which connects Csound with the external world) and the reduction of latency, problems that did not appear solvable in the first real-time versions of Csound.

The new functionalities allow total control of the synthesis parameters, which can be defined by the user in a very flexible way. This flexibility, together with synthesis power limited only by the speed of the computer, lets DirectCsound go beyond what hardware MIDI synthesizers offer. Just a few years ago, having a workstation with such real-time synthesis power at home was a dream; such features were reserved for machines costing hundreds of thousands of dollars.

Since DirectCsound is free, an inexpensive PC with an audio card is sufficient both for composing music interactively and for giving live performances. It is now possible to think of Csound as a universal musical instrument.

1.1 Why use a sound synthesis language? Some words about Csound

The reader might now ask why one should use a special language to make music instead of a general-purpose programming language such as C, C++, Java or Basic. The answer is that, although it is always possible to use a general-purpose language, a dedicated language is easier to learn, easier to use, faster to program and, in most cases, more efficient. Making music with Csound involves writing two program files, the first called the “orchestra” and the second the “score” (although in real-time use it is now possible to work with the orchestra file alone). By processing these two files, Csound generates an audio file consisting of a sequence of samples. This file can be heard later with a sound player program (such as the multimedia player in Windows). About this concept, Barry Vercoe [2][3] (the author of the first versions of Csound) says:

“Realizing music by digital computer involves synthesizing audio signals with discrete points or samples that are representative of continuous waveforms. There are several ways of doing this, each affording a different manner of control. Direct synthesis generates waveforms by sampling a stored function representing a single cycle; additive synthesis generates the many partials of a complex tone, each with its own loudness envelope; subtractive synthesis begins with a complex tone and filters it. Non-linear synthesis uses frequency modulation and waveshaping to give simple signals complex characteristics, while sampling and storage of natural sound allows it to be used at will. Since comprehensive moment-by-moment specification of sound can be tedious, control is gained in two ways:

1) from the instruments in an orchestra, and

2) from the events within a score.

An orchestra is really a computer program that can produce sound, while a score is a body of data which that program can react to. Whether a rise-time characteristic is a fixed constant in an instrument, or a variable of each note in the score, depends on how the user wants to control it. The instruments in a Csound orchestra are defined in a simple syntax that invokes complex audio processing routines. A score passed to this orchestra contains numerically coded pitch and control information, in standard numeric score format [....]

The orchestra file is a set of instruments that tell the computer how to synthesize sound; the score file tells the computer when. An instrument is a collection of modular statements which either generate or modify a signal; signals are represented by symbols, which can be "patched" from one module to another. For example, the following two statements will generate a 440 Hz sine tone and send it to an output channel:

asig oscil 10000, 440, 1

out asig

In general, an orchestra statement in Csound consists of an action symbol followed by a set of input variables and preceded by a result symbol. Its action is to process the inputs and deposit the result where told. The meaning of the input variables depends on the action requested. The 10000 above is interpreted as an amplitude value because it occupies the first input slot of an oscil unit (this kind of units are also called “opcodes”); 440 signifies a frequency in Hertz because that is how an oscil unit interprets its second input argument; the waveform number is taken to point indirectly to a stored function table, and before we invoke this instrument in a score we must fill function table number 1 with some waveform.”
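What the oscil statement in the quotation does can be sketched outside Csound. The following is a minimal Python illustration, not Csound's actual implementation: it assumes a sampling rate of 44100 Hz and a 256-point table (neither is stated in the quotation), and the function names are hypothetical.

```python
import math

SAMPLE_RATE = 44100   # assumed sampling rate, not given in the text
TABLE_SIZE = 256      # assumed table length

def make_sine_table(size=TABLE_SIZE):
    """One cycle of a sine wave, playing the role of function table 1."""
    return [math.sin(2.0 * math.pi * i / size) for i in range(size)]

def oscil(amp, freq, table, n_samples, sr=SAMPLE_RATE):
    """Table-lookup oscillator: read the table at a speed set by freq."""
    size = len(table)
    phase = 0.0
    increment = freq * size / sr          # table positions advanced per sample
    out = []
    for _ in range(n_samples):
        out.append(amp * table[int(phase)])   # truncating lookup, no interpolation
        phase = (phase + increment) % size
    return out

table = make_sine_table()
asig = oscil(10000, 440, table, SAMPLE_RATE)   # one second of a 440 Hz tone
```

The three arguments mirror the three input slots described in the quotation: amplitude, frequency in Hertz, and the stored waveform that the table number points to.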

Csound’s approach to sound synthesis can in fact be compared to connecting signal-generator modules on a hardware modular synthesizer: modules are replaced by keywords, and cables by variables representing signals. The score file of Csound has a numeric format. The following example illustrates it (it does not refer to the previous orchestra example, but to a more complex one):

;Score example

;a sine wave function table

f 1 0 256 10 1

; some notes

i 1 0 0.5 0 8.01

i 1 0.5 0.5 0 8.03

i 1 1.0 0.5 0 8.06

i 1 1.5 0.5 0 8.08

i 1 2.0 0.5 0 8.10

e

Each line of the score above begins with a letter identifying a statement (an f statement creates a table and fills it with a function, an i statement indicates a note, and a semicolon starts a comment that runs to the end of the line). Each statement letter is followed by numbers separated by spaces; these are the parameters of the statement, called p-fields. In an i statement, the first p-field is the instrument identifier (an orchestra file can contain several instruments, each identified by a number, and each able to synthesize sound in a different way); the second is the start time of the note, the third is its duration, and the remaining ones are parameters specific to each instrument.
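The p-field layout just described can be made concrete with a small parser. This is only an illustrative Python sketch, assuming fields separated by spaces exactly as in the example score; the function name and the dictionary keys are hypothetical and are not part of Csound.

```python
def parse_i_statement(line):
    """Split an 'i' score statement into named p-fields."""
    text = line.split(";", 1)[0].strip()     # drop any trailing comment
    fields = text.split()
    if not fields or fields[0] != "i":
        raise ValueError("not an i statement: %r" % line)
    values = [float(f) for f in fields[1:]]
    return {
        "instrument": int(values[0]),   # p1: instrument identifier
        "start": values[1],             # p2: start time of the note
        "duration": values[2],          # p3: duration of the note
        "extra": values[3:],            # p4 onwards: meaning set by the instrument
    }

note = parse_i_statement("i 1 0.5 0.5 0 8.03")
```

Here the second note of the example score yields instrument 1, start time 0.5, duration 0.5, and two instrument-specific values whose interpretation depends entirely on the orchestra.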

Notice that this approach differs from making computer music with MIDI instruments and MIDI sequencers (even though Csound can handle MIDI), because the composer can define not only the notes but also the quality and timbre of the sound. It is possible to work at any level of detail, and all levels can be mixed with one another, so that the computer generates both the sound and the musical texture and structure. When using Csound the term “generative art” is always appropriate.

1.2 Why real-time?

What is the difference between using Csound in real-time and using it in deferred-time? Why should a composer prefer to use it in real-time? The most obvious reason is that sending Csound’s output directly to the audio DACs (instead of writing it to a file) saves both hard-disk space and the time spent waiting to hear the result; this is quite useful when composing a piece, because many trials are normally needed before the result meets the composer’s expectations. In this case real-time is not essential, only more convenient.

But there are cases in which real-time is indispensable. What if a composer decides to make a piece in which some musical parameters are fixed but others can be modified during the performance? What if he wants to control a MIDI synthesiser by means of his favourite Csound algorithm or pitch tables? What if a performer needs a synthesiser capable of completely new synthesis methods that are not yet implemented in any hardware synth? Real-time also becomes necessary when some non-intuitive parameters of the composition need to be tuned accurately by ear.

1.3 Four different ways to use Csound in real-time

The first way can be considered the most trivial, since no MIDI opcodes are used and the sound output is identical to the wave file produced by a deferred-time Csound session with the same orchestra/score pair. It is useful for reducing the time spent waiting for the wave file to be processed and for removing the need for a large amount of hard-disk space, but from the listener’s point of view it is in every respect identical to deferred-time.

The second way is the opposite of the first: Csound is treated as a MIDI synthesiser (the most powerful synth in the world!). This synth can be connected to a MIDI master keyboard for a piano-like performance; it can also be played with controllers other than a piano keyboard, such as wind controllers, guitar controllers, drum controllers etc. In this case no p-fields are present in the score. Each note is activated by a MIDI note-on message and deactivated by a note-off message. MIDI control-change messages are also recognized by DirectCsound and can be assigned to any synthesis parameter. A MIDI mixer or the VMCI program (see below for more information on it) can be used to drive a bank of controllers. VMCI can serve as a synth editor for Csound if an instrument is designed with a large number of controller opcodes, each defining a particular patch parameter. For example, the durations and levels of an instrument’s ADSR amplitude envelope can be assigned to a bank of sliders. A Csound instrument can thus be regarded as the synthesis algorithm, and each slider configuration (which VMCI can save to disk) as a particular synth patch that can be edited to the user’s taste.

Continuous parameters can be modified via MIDI through the performer’s gestures, by means of devices such as control sliders, modulation wheels, breath controllers, pitch wheels, aftertouch etc. In this case no note is defined in the score, because all notes are activated in real-time by the performer.

The third way to use Csound in real-time combines score-oriented instruments with some parameters that can be modified in real-time by a MIDI controller while the score is performed. In this case each note has its p-fields already defined in the score, but there can be some additional parameters that are modified at performance time. The action time and the duration of each note are fixed, but the metronomic speed of the performance can be changed in real-time by the user. Any kind of parameter can be assigned to a live controller. This can enrich concert performances: the composition acquires a new flavour each time and is never exactly the same.

The fourth way to use Csound is somewhat reminiscent of the "one-key-play" mode of old, cheap Casio keyboards. In this mode, note-on messages trigger note events that are pulled from a queue of note-parameter blocks stored in a table; that is, all the parameters of each note have to be stored in a table beforehand. Note-off messages deactivate a playing note. Overlapping notes and polyphony are allowed. All the parameters used in a conventional score-oriented instrument can be ported to a real-time activated instrument with this method. I like playing Csound in this way very much, because it is possible to define many very precise parameters for each note while the activation time and the duration of each note are decided by the performer at performance time. This gives the user control over interpretative nuances of timing such as ritardando and accelerando, which are very difficult to define precisely when designing the score. Also, two additional values, obtained from the note number and the velocity of each note played at performance time, can be used to control any kind of parameter. A section of a standard score written for a score-oriented instrument can easily be converted for use with the "one-key-play" mode.
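The logic of the "one-key-play" mode can be sketched as a queue that is consumed by incoming note-on messages. The Python below is a hypothetical illustration of the idea only; it is not how DirectCsound implements it, and the class and attribute names are invented for the example. The parameter blocks reuse the instrument-specific values of the example score.

```python
from collections import deque

class OneKeyPlay:
    """Feeds pre-stored note-parameter blocks to notes triggered by MIDI."""

    def __init__(self, parameter_blocks):
        self.queue = deque(parameter_blocks)   # blocks are consumed in order
        self.active = {}                       # MIDI note number -> playing note

    def note_on(self, note_number, velocity):
        if not self.queue:
            return None                        # no stored blocks left
        params = self.queue.popleft()
        # Key number and velocity remain available as two extra live values.
        note = {"params": params, "key": note_number, "velocity": velocity}
        self.active[note_number] = note        # several keys may overlap
        return note

    def note_off(self, note_number):
        return self.active.pop(note_number, None)

blocks = [(0, 8.01), (0, 8.03), (0, 8.06)]
player = OneKeyPlay(blocks)
first = player.note_on(60, 100)     # takes the first stored block
second = player.note_on(64, 80)     # overlaps with the first note
released = player.note_off(60)
```

Whatever keys the performer presses, the stored blocks come out in their original order; only the timing, and the two extra values, are decided at performance time.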

2. Space in Music: Music Space, Spatial Sound and Sonic Space

In this section, before turning to VMCI, we must clarify the concept of space as applied to music. In fact, the relationship between space and music has several meanings.

The first and most intuitive meaning is the location of sound in real three-dimensional space. Evolution has given humans a very precise ability to recognize the position of a sound source. Another ability (concerning the same type of space) is recognizing the size and shape of the environment in which a sound source is placed. Both the position of a sound source with respect to a listener and the properties of the environment (such as reflection, size, air absorption etc.) are covered by new features of DirectCsound, which enable a musician to work with multi-channel surround sound. When dealing with music, I call this “sound spatialization” or “spatial sound”.

Another meaning of space is the compositional space. This is an imaginary space concerning the structure of a musical composition. The structure of a composition can have several levels of organization, from the micro to the macro, and can be analyzed in fine detail with a set-theory-like approach; this is in fact the model used by most musicologists and music analysts. In this case time can be treated as a spatial dimension. The traditional western system of music writing uses the concept of “note” to deal with this kind of space (the staff can be considered a sort of Cartesian plane representing time in the horizontal dimension and pitch in the vertical). However, as we will see later, western musical notation runs into many difficulties when dealing with a compositional space of more than two dimensions (pitch and time). I call this space “music space”; when making digital music, this concept is somewhat linked with the next meaning of the space-music relationship.

The third meaning is the sound parameter space. Although a sound signal can be completely represented in a three-dimensional Cartesian space of frequency, time and amplitude, generating a sound can involve a huge number of parameters. For practical reasons, in synthetic sounds this number must be finite. To obtain interesting sounds, many synthesis parameters that can be controlled independently and simultaneously are needed. The Csound language can handle virtually any number of parameters by means of its orchestra-score philosophy. In Csound, a note both is and is not comparable to a note of a traditional score: besides duration, amplitude and pitch, each note can be activated with a large number of further synthesis parameters, depending on how the corresponding orchestra instrument is implemented. The orchestra-score approach in Csound is oriented to deferred-time.

When using Csound in real-time, as each note is triggered by MIDI, only three parameters can be passed at a time (instrument number, note number and velocity). So, to control further parameters, it is necessary to use MIDI control-change messages. A single MIDI port can handle up to 16 channels, and each channel can handle up to 128 different controllers, each with a range of 128 values. So, in theory, up to 2048 different variant parameters can be used in real-time with the MIDI protocol (16 x 128 = 2048).
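The count of 2048 follows directly from the layout of a MIDI control-change message, which carries a channel, a controller number and a value. A minimal Python sketch of that message follows; the function name is hypothetical, while the byte layout is that of the MIDI 1.0 protocol.

```python
def control_change(channel, controller, value):
    """Build the three bytes of a MIDI control-change message.

    channel is 0-15; controller and value are 0-127.
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel out of range")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must fit in seven bits")
    status = 0xB0 | channel          # upper nibble 0xB marks a control change
    return bytes([status, controller, value])

addressable = 16 * 128               # distinct (channel, controller) pairs
msg = control_change(0, 7, 100)      # controller 7 on the first channel
```

Each (channel, controller) pair is one independently addressable parameter, which gives the 2048 of the text; each of them takes one of 128 values.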

An instrument containing, for example, 20 different controllable parameters can be imagined as immersed in a space with 20 dimensions. I call this kind of space “sonic space”. The problem is how a single performer can control such a massive number of parameters in the case of complex instruments. VMCI Plus, a program whose purpose is to control DirectCsound in real-time, offers an answer by proposing two further kinds of space: user-pointer-motion space and sonic-variant-parameter space. See the section “Considerations about the multi-dimensionality of the hyper-vectorial synthesis” below for more information about these spaces.

3. The Virtual Midi Control Interface (VMCI Plus program)

A computer mouse and standard hardware MIDI controllers (such as master keyboards and fader consoles) allow the user to modify only a few parameters at a time, so changing several of them in parallel (in the case of sounds with many variant parameters) is by no means an easy task. Furthermore, hardware MIDI fader banks rarely allow the user to save and restore the exact position of each fader, which is a very important feature when developing a timbre of any complexity, since a Csound instrument can require dozens of variant parameters, each adjustable by the user.

It is important to understand that each instrument of a Csound orchestra is more comparable to a hardware synthesizer than to a synthesizer patch. The timbre of a note produced by a Csound instrument can be very different from that of another note produced by the same instrument, according to the p-field values (when working in deferred-time) or to the MIDI controller messages sent to Csound by a performer during a real-time MIDI session.

So a hardware synthesizer patch can be compared to a configuration of the changeable parameters of a Csound instrument. A musician who finds a good timbre while adjusting these parameters manually in real-time will expect to be able to restore all of that work when starting a new Csound session.

For this reason I think that computer programs dealing with these kinds of problems are very handy when working in real-time with Csound.

VMCI is intended to accomplish two tasks:

· implementing timbres (starting from a MIDI-controlled Csound instrument)

· varying timbres during real-time performances

There are two versions of VMCI: 1.2 (freeware) and 2.0 (named VMCI Plus, shareware). The latter provides the hyper-vectorial synthesis panel, which can be used to change hundreds of parameters with a single mouse movement.

3.1 Activation Bar, Slider Panels and Joystick Panels

In this section we deal with VMCI Plus version 2 (shareware). Some of the information given here also applies to the earlier freeware version, although version 2 has many extra features.

The VMCI program consists of several windows. When VMCI

starts, the activation bar appears.

The activation bar is made to create the other windows, to set-up the MIDI port

and other Csound-related features, to save and load setup-files (VMCI setups

are files including all of the user’s configuration parameters), to show/hide

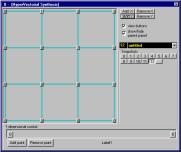

existing windows, and to activate/deactivate some program actions (Fig.1).

fig.1: the activation

bar

The activation bar provides several buttons, whose functions include starting Csound, opening and editing the orchestra and score files, opening and clearing the copy-board (a text area used to store useful textual information about the current slider configuration), and some other activities.

Also, VMCI provides several classes of panels related to the modification of MIDI controller data:

· slider panels (fig.2)

· joystick panels (fig.3)

· keyboard panel (fig.4)

fig.2: 7 bit slider panel

Slider panels and joystick panels allow the user to create a new window, the Hyper-Vectorial panel (fig.6).

Slider panels contain a variable number of sliders (i.e. scroll-bars) that can be defined by the user.

fig.3: 14-bit joystick panel

Each slider is associated with a MIDI control-change message, so a stream of messages is sent to the MIDI OUT port (which can be chosen by the user) when the user modifies the corresponding slider position.

fig.4: virtual keyboard panel

Up to 64 sliders can be displayed in a single panel at a time, but the visible sliders are only a part of the total number of controllers that each panel can handle. In fact, each panel can be linked to up to 2048 control-change messages (128 control numbers x 16 MIDI channels).

Joystick panels are similar to slider panels, but with a joystick panel the user can modify two parameters with a single mouse movement: the horizontal and vertical axes of a joystick area can each be associated with any control-change message on any MIDI channel, just as the sliders of a slider panel can. A slider panel can be turned directly into a joystick panel with a single mouse click; the current position of each slider is then instantly converted into the position of the lines of the corresponding joystick area, so no information about the current parameter configuration is lost.

There are two kinds of panels: low-resolution panels, with seven-bit data, and high-resolution panels, with fourteen-bit data (only seven bits are used in each byte of MIDI data). Each slider of a high-resolution panel sends a pair of MIDI control messages, the first carrying the most significant byte and the second the least significant one.

Csound has some opcodes that handle such pairs of messages correctly, interpreting them as a single fourteen-bit number and scaling the opcode output within a minimum-maximum range defined by the user. The minimum and maximum values can be defined in the VMCI panels too, so the user can view the actual scaled value of each MIDI message sent, as interpreted by Csound (the scaled value is shown in a text area whose text can be copied and pasted into another file for any purpose).
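The splitting of a fourteen-bit value into two seven-bit data bytes, and its scaling to a user-defined range, can be sketched as follows. This Python fragment is illustrative only: the function names are hypothetical, and the linear mapping of the raw range 0..16383 onto minimum..maximum is an assumption about the scaling, not a statement of how the Csound opcodes compute it.

```python
def split_14bit(value):
    """Return (msb, lsb): the two seven-bit halves of a 14-bit value."""
    if not 0 <= value <= 0x3FFF:
        raise ValueError("value must fit in fourteen bits")
    return (value >> 7) & 0x7F, value & 0x7F

def join_14bit(msb, lsb):
    """Rebuild the 14-bit value from its two seven-bit halves."""
    return (msb << 7) | lsb

def scale(value, max_raw, minimum, maximum):
    """Map a raw controller value in 0..max_raw linearly onto minimum..maximum."""
    return minimum + (maximum - minimum) * value / max_raw

msb, lsb = split_14bit(8192)                       # slider near mid-travel
scaled = scale(join_14bit(msb, lsb), 16383, 200.0, 5000.0)
```

A seven-bit slider offers 128 steps over the same range and a fourteen-bit slider 16384, which matters for parameters such as frequency, where coarse steps are audible.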

When the user reaches an interesting slider configuration, he can store it in a ‘snapshot’. A single panel can contain up to 128 snapshots. Each snapshot can be recalled instantly with a mouse click, in which case all the control messages corresponding to the slider positions are immediately sent to the MIDI out port. Each snapshot can be given a name, so a panel can also be regarded as a “bank of patches” in which each patch corresponds to a snapshot. When a VMCI setup file is saved to the hard-disk, all the snapshots are saved as well and can be restored when that file is loaded again.
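The snapshot mechanism can be pictured as a dictionary of saved slider positions whose recall regenerates one control-change message per slider. The Python below is a hypothetical model written for illustration; it does not reflect VMCI's internal design or its setup-file format, and all names are invented.

```python
class SliderPanel:
    """A bank of seven-bit sliders with named snapshots."""

    MAX_SNAPSHOTS = 128

    def __init__(self, channel, controllers):
        self.channel = channel                             # MIDI channel, 0-15
        self.positions = {cc: 0 for cc in controllers}     # controller -> 0..127
        self.snapshots = {}                                # name -> saved positions

    def store(self, name):
        if name not in self.snapshots and len(self.snapshots) >= self.MAX_SNAPSHOTS:
            raise ValueError("panel already holds 128 snapshots")
        self.snapshots[name] = dict(self.positions)        # copy, not a reference

    def recall(self, name):
        """Restore a snapshot and return the messages to send to MIDI out."""
        self.positions = dict(self.snapshots[name])
        status = 0xB0 | self.channel                       # control-change status
        return [bytes([status, cc, val])
                for cc, val in sorted(self.positions.items())]

panel = SliderPanel(0, [1, 2, 3])
panel.positions[1] = 90
panel.store("bright")
panel.positions[1] = 10              # the user keeps editing
messages = panel.recall("bright")    # the earlier configuration comes back
```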

3.2 Hyper-vectorial synthesis

Each slider or joystick panel can create another panel, called a “hyper-vectorial synthesis panel”; the user opens or closes it by clicking a special button in the parent panel. Hyper-vectorial synthesis panels allow the user to vary many parameters at the same time with a single mouse movement.

fig.5: vectorial synthesis according to Korg

I called this kind of action “hyper-vectorial synthesis”, deriving the name from the vectorial synthesis used in some MIDI synthesizers such as the Korg Wavestation. The term “vectorial” refers to a path traced by a joystick in a square area bounded by four points (A-B-C-D), each representing a different sonic configuration (see fig.5). (By “sonic configuration” I mean a set of synthesis parameter values that produce a particular timbre.) When the joystick path touches one of these points, the resulting timbre is the sonic configuration set for that point. When the joystick path passes through any other location of the plane, the sound generated is a scaled combination of the timbres of the four points A-B-C-D, determined by the instantaneous distance of the path from each of them.

fig.6: VMCI hyper-vectorial synthesis panel

In the Korg Wavestation, vectorial synthesis is produced by a simple amplitude crossfade of four pre-generated sounds (corresponding to the points A-B-C-D); the only parameters modulated are the amplitudes of the four sounds themselves.
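A four-point amplitude crossfade of this kind can be sketched with bilinear weights. The Python below is an illustration under stated assumptions, not a description of the Wavestation's actual algorithm: it assumes the points A-B-C-D sit at the corners of a unit square and that the gains vary linearly with the joystick position.

```python
def corner_weights(x, y):
    """Bilinear gains for a joystick at (x, y), with 0 <= x, y <= 1.

    Corner layout assumed here: A=(0,0), B=(1,0), C=(1,1), D=(0,1).
    """
    return {
        "A": (1 - x) * (1 - y),
        "B": x * (1 - y),
        "C": x * y,
        "D": (1 - x) * y,
    }

def crossfade(samples, x, y):
    """Mix one sample from each of the four sources by joystick position."""
    w = corner_weights(x, y)
    return sum(w[name] * samples[name] for name in "ABCD")

centre = corner_weights(0.5, 0.5)    # equal contribution from all four sounds
```

At a corner the gain of that sound is 1 and the others are 0; everywhere else the four gains sum to 1, so the overall level stays roughly constant as the joystick moves.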

In VMCI Plus this concept is greatly extended. First, the vectorial area can contain more than four sonic-configuration points (see fig.6). I call these points “breakpoints”. Second, each breakpoint corresponds to a snapshot containing all the slider positions of a panel. Consequently, each breakpoint holds the values of many different parameters (in theory up to 2048 seven-bit values, or 1024 fourteen-bit values); each of the controller positions contained in a breakpoint can be related to any kind of synthesis parameter (not only amplitude crossfading, as in the Korg case), according to the corresponding Csound instrument. So a massive number of synthesis parameters can be varied with a single mouse drag; the only limits are computer processing speed and MIDI transfer bandwidth.
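One way to picture how a single pointer position can drive many controllers at once is to blend the breakpoint snapshots according to their distance from the pointer. The Python sketch below uses inverse-distance weighting; this particular formula is an assumption chosen for illustration, since the text does not specify the interpolation VMCI Plus uses, and the function and parameter names are hypothetical.

```python
import math

def interpolate(pointer, breakpoints, power=2.0):
    """Blend breakpoint snapshots by inverse-distance weighting.

    pointer     -- (x, y) position of the mouse in the vectorial area
    breakpoints -- list of ((x, y), [controller values]) pairs
    """
    weights = []
    for position, values in breakpoints:
        distance = math.hypot(pointer[0] - position[0], pointer[1] - position[1])
        if distance == 0.0:
            return list(values)              # pointer is exactly on a breakpoint
        weights.append(1.0 / distance ** power)
    total = sum(weights)
    n_params = len(breakpoints[0][1])
    return [
        round(sum(w * bp[1][i] for w, bp in zip(weights, breakpoints)) / total)
        for i in range(n_params)
    ]

breakpoints = [
    ((0.0, 0.0), [0, 127, 64]),
    ((1.0, 0.0), [127, 0, 64]),
    ((0.5, 1.0), [64, 64, 0]),
]
values = interpolate((0.5, 0.0), breakpoints)   # one value per controller
```

Each element of the result would then be sent as its own control-change message, so the cost of one mouse movement grows with the number of controllers stored in the breakpoints, which is where the MIDI bandwidth limit mentioned above comes from.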

4. Considerations about the multi-dimensionality of the hyper-vectorial synthesis

If we regard each variant sound-synthesis parameter as a dimension of an N-dimensional space, we can regard a synthesized timbre as a specific point of that space. If a synthesized sound changes its timbre continuously, we can compare that sound to a point moving inside the corresponding N-dimensional space. The number of dimensions of the space is determined by the number of variant synthesis parameters. For example, a note generated by a Csound instrument with only two user-variant parameters (say pitch and amplitude) can be considered a point of a two-dimensional space, i.e. a point of a plane.

Until now, most western music has been laid out on a two-dimensional plane, because only the pitch and the time position of each sound event can be written precisely in a standard music score (the amplitude of notes can be considered a third dimension, but standard western notation does not allow this parameter to be defined with precision, so it is often rather aleatory and in practice left to the performer’s taste).

Computer music opens the possibility of composing with any number of variant parameters, and Csound in particular provides two ways to work with them: (1) discrete initialization parameters (for example standard score p-fields) and (2) continuous parameters, which can be driven by mathematical functions or by real-time user gestures transferred to Csound by means of MIDI or other protocols (for example from MIDI slider consoles, a mouse or graphic tablets). In the present version of VMCI, it is possible to use the mouse to move a point inside a sonic space of up to 2048 dimensions (obviously a theoretical number; actual MIDI bandwidth limits this value). The motion of that point may appear to be constrained (by the previously defined configurations of the breakpoints), but since breakpoint configurations are defined by the composer himself, this constraint should be considered not a limit but a compositional feature.

At this point I must clarify a concept: there are two distinct kinds of spaces we deal with when using VMCI:

· user-pointer-motion space

· sonic-variant-parameter space

User-pointer-motion space is the space in which the user moves the mouse pointer, tracing its path. In the VMCI hyper-vectorial synthesis panel there are two areas related to this kind of space: a two-dimensional area and a one-dimensional area. The user can add up to 128 breakpoints in each of these two areas (the practical limit is screen resolution). Future versions will support three-dimensional user-pointer-motion spaces (see next section).

Sonic-variant-parameter space is a theoretical space in which each variant parameter of the synthesized sound represents a dimension. For example, for a Csound instrument that takes eight p-fields from the score (besides the action time and duration of each note), this space has eight dimensions. In the same way, for a Csound instrument that contains, say, eleven opcodes returning MIDI controller positions, the corresponding timbre can be considered as contained in an eleven-dimensional space.

A user-pointer-motion space can also be considered a projection or a section of a sonic-variant-parameter space, because the latter normally has a greater number of dimensions.

5. Future development

The present version (VMCI Plus 2.0) implements hyper-vectorial synthesis in two user-pointer-motion areas: a one-dimensional and a two-dimensional mouse area. Future versions of VMCI will support hardware graphic tablets and joysticks in order to provide a tactile device for controlling hyper-vectorial synthesis. They will also allow it to be controlled by external hardware MIDI devices and virtual reality devices. V.R. devices will add the possibility of moving the user pointer in a three-dimensional space.

Higher-resolution slider panels are also expected in future versions, as Csound can at present handle up to 21-bit MIDI control data (consisting of three 7-bit bytes).

Some network protocols will also be supported as an alternative to MIDI. This will make it possible to send floating-point data and will provide a higher bandwidth than MIDI.

6. How to get the program

Freeware and shareware versions of VMCI, as well as DirectCsound, can be downloaded from my site:

http://web.tiscalinet.it/G-Maldonado

A copy of both programs is included on the CD-ROMs of “The Csound Book” [4] and “The Virtual Sound” [5].

Interested people can also contact me directly at the following email addresses:

g.maldonado@agora.stm.it

g.maldonado@tiscalinet.it

References

[1] The Csound Front Page - http://www.csound.org

[2] Vercoe, Barry - http://sound.media.mit.edu/people/bv

[3] Vercoe, Barry “The Csound Manual” - http://www.geocities.com/Vienna/Studio/5746

[4] “The Csound Book” (edited by Richard Boulanger), MIT Press - USA 1999

[5] Bianchini R. - Cipriani A. “The Virtual Sound” - ed. Contempo - Italy 1999