Agent Visualization

Chiron Mottram, BA, MSc

Bartlett School of Graduate Studies, University College London, U.K.

e-mail: c.mottram@ucl.ac.uk

Abstract

This paper describes software we are developing for an artwork-cum-performance piece which brings dancers, computer-generated agents and an audience together in the same space.

The software negotiates the behaviours of the dancers and the agents, adding a further layer of visualization to the performance as users learn to evade, engage, block or trap the agents. Experienced dancers would thus produce predictable, reproducible visual effects, while novices would produce random, chaotic effects.

The agents use a visual field to interact with each other and with the environment. They have a mixture of adaptive and programmable behaviour, including origins and destinations, which can be set and reloaded.

Secondary, but potentially more visible, is a particle field which moves in a virtual space using the trails left by the agents. A number of parameters affect the persistence of the agents’ trails and their attractiveness, or otherwise, to the particles, which run on two algorithms.

The first looks at the gradient of the virtual space, which is composed from the alpha channel of a texture altered by the agents and the blue channel of the camera input. The particles’ life, direction and velocity are affected by this gradient.

The particles can also set the gradient, so particles can group together to manage their own persistence.

As such, this is similar to the "Game of Life" algorithms and provides us with ample room for emergent phenomena.

The other algorithm is a simple pressure model which uses an advection and dispersal equation on all the particles. This can be used to produce "atmospheric" effects. Because this algorithm works at the global level whereas the first works at a local level, the effects can be made to contradict each other.

All parameters can be set and saved, and can be rerun in timed sequences, to enable a choreography to be developed in collaboration with dancers and musicians.

The OpenGL Shading Language has allowed the visualization and mixing of images to occur in real time at high definition.

1. Introduction

The original brief for this piece was to devise a piece of art derived from work which had been done here previously with “visual agents” [1]. These agents have a limited intelligence and a programmable, adaptable movement with an immediate response to visual stimulation, provided either by a live camera feed or, as in [1], by sensors attached to objects.

There are three considerations within this brief: firstly to represent the agents, secondly to show them responding to the dancers, and thirdly to allow the system to evolve dependent on the behaviour of the dancers and the agents.

The original inspiration was for a complex surface which moved in response to the movement of intelligent agents and dancers. An agent walking on the surface would raise or lower the surface at that place, pulling the surrounding area smoothly with it. This could also be applied to the dancers’ movement, so that the whole surface became like a live Kohonen net.

As a secondary consideration a particle system was imagined, which could express an intermediate state between the two forms, or perhaps something else entirely, such as the atmosphere or mood of a section.

Whilst working on the particles it became apparent that they were interesting in their own right: the complexity of interaction and the possibilities for aesthetic output were very large, and this was effectively a new palette for the artist or performer, one which enables the swift creation of interactive effects.

From my own experience as an artist I was reminded of the many different ways painters have attempted to simulate the effects of water and atmosphere, J.M.W. Turner being an excellent example of a painter to whom the movement of water, clouds and light was central. I have begun to imagine that it might be possible to produce a live, interactive version of some of the effects explored in his paintings: the clouds that move, the smoke which curls and the sea which swells…


2. Method

2.1 Background

The original agents program, as in [1], uses a simple texture to represent the world the agents live in. Their vision uses a simple line-drawing algorithm which has been altered to pick up, rather than put down, the colour of the individual pixels. A random sample of these lines is taken within the agents’ field of view, and the agents choose their direction based on this information. A number of parameters can be set to control how far the agent looks or can look, the number of samples, the field of view, and the boundaries to the agents’ movement. This is done through a dialogue which is beyond this paper’s scope. When we use a camera, this texture is dynamically updated with the image from the camera.
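A minimal sketch of this kind of sampled vision follows, written in Python purely for illustration. The grid encoding (0 for free space), the function names and the rule of heading along the longest unobstructed line are all assumptions; the text above states only that the direction is chosen from the sampled lines. A simple stepped ray stands in for the altered line-drawing algorithm.

```python
import math
import random


def cast_ray(world, x0, y0, angle, max_range):
    """Walk a line from (x0, y0) and return how many free cells were seen.

    `world` is a list of rows; 0 means free space, anything else blocks sight.
    Instead of drawing pixels along the line, we read them.
    """
    dx, dy = math.cos(angle), math.sin(angle)
    for step in range(1, max_range + 1):
        x = int(round(x0 + dx * step))
        y = int(round(y0 + dy * step))
        outside = not (0 <= y < len(world) and 0 <= x < len(world[0]))
        if outside or world[y][x] != 0:
            return step - 1
    return max_range


def choose_heading(world, x, y, heading, fov, samples, max_range, rng=random):
    """Sample random lines inside the field of view and keep the longest one.

    Choosing the longest free line is an assumed decision rule.
    """
    best_angle, best_depth = heading, -1
    for _ in range(samples):
        angle = heading + rng.uniform(-fov / 2, fov / 2)
        depth = cast_ray(world, x, y, angle, max_range)
        if depth > best_depth:
            best_angle, best_depth = angle, depth
    return best_angle
```

Here `max_range`, `samples` and `fov` play the roles of how far the agent looks, the number of samples and the field of view.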

2.2 Main implementation

The focus of everything in this paper is a single texture, 1024x512 in size, on which we create our effects. The dimensions are multiples of 512 because OpenGL textures are conventionally sized in powers of two. OpenGL was introduced to allow us to do certain parts of the calculation on the graphics card, which has greatly increased the quality of the output. The 2:1 aspect ratio is good for projecting the final image within a studio or performance space.

These choices are fairly arbitrary, but they are a useful device for reducing the complexity inherent in accepting input from cameras of different resolutions and outputting to windows of different sizes.

We do one conversion on the incoming signal, either to reduce its resolution or to stretch it, and then allow no resizing of the window. This texture is then an array of 1024x512 32-bit values. Each 32-bit value is divided into 8 bits of red, 8 bits of green, 8 bits of blue and 8 bits which normally store transparency but which we use here to store the altitude of the surface. This altitude is very important, as the variations in the altitude determine the movement of the particles. The 8 bits allow only 256 different altitudes, but for the moment this has been ample.

As it stands there are three different ways in which the altitude of each pixel of the surface can be altered. There are also different ways in which the movement of the particles can be altered. To this end we use a dialogue which allows us to set all the different parameters and the different scalar factors we use to combine the different methods. It also allows us to set the number, life and visual appearance of the particles, as well as how all this is combined with the input from the camera. All these parameters can be saved and re-loaded. The program also allows these files to be loaded sequentially, each for a timed period, from a text file which lists the name of each file and its duration on consecutive lines.
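The 32-bit texel layout described above can be sketched as follows. The byte order (red in the highest byte, altitude in the lowest) and the function names are assumptions made for illustration; the actual order depends on the texture format in use.

```python
def pack_texel(red, green, blue, altitude):
    """Pack three colour channels and an altitude into one 32-bit value.

    The altitude takes the place of the usual alpha (transparency) byte.
    """
    for channel in (red, green, blue, altitude):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must fit in 8 bits (0-255)")
    return (red << 24) | (green << 16) | (blue << 8) | altitude


def unpack_texel(texel):
    """Return (red, green, blue, altitude) from a packed 32-bit value."""
    return (
        (texel >> 24) & 0xFF,
        (texel >> 16) & 0xFF,
        (texel >> 8) & 0xFF,
        texel & 0xFF,
    )


def altitude_of(texel):
    """Read only the altitude byte, the value that drives the particles."""
    return texel & 0xFF
```

With 8 bits the altitude can take the values 0 to 255 inclusive, which is the limit discussed above.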

A number of variables and checkboxes from a dialogue are used in the program with the following effects. The name of each variable is written in quotation marks as it appears on the dialogue.

2.2.1 Methods of altering altitude

2.2.1.1 Agent movement

The agent position is loaded into this module as a source of particles. The altitude can also be set using the “Agent footprint” variable. By default this sets the altitude to an absolute value; if the “abs/-” checkbox is checked it becomes an added value. The “Size” box allows us to set the size of the affected area.
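As an illustration, the footprint might be stamped into the altitude channel as below. The square shape of the affected area, the clamping to 0-255 and all names are assumptions; only the absolute/added distinction and the size setting come from the description above.

```python
def clamp8(value):
    """Keep an altitude inside the 8-bit range."""
    return max(0, min(255, int(value)))


def apply_footprint(altitude, cx, cy, footprint, size, additive=False):
    """Stamp a square footprint of half-width `size` centred on (cx, cy).

    altitude: list of rows of altitude values, modified in place.
    additive=False sets the altitude to `footprint` (the default behaviour);
    additive=True adds `footprint` to the existing altitude instead.
    """
    height, width = len(altitude), len(altitude[0])
    for y in range(max(0, cy - size), min(height, cy + size + 1)):
        for x in range(max(0, cx - size), min(width, cx + size + 1)):
            if additive:
                altitude[y][x] = clamp8(altitude[y][x] + footprint)
            else:
                altitude[y][x] = clamp8(footprint)
    return altitude
```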

2.2.1.2 Particles

The “Particle footprint” variable by default allows us to say how much the particle will alter the altitude of the surface at the particle’s location; the “-/abs” checkbox instead sets the altitude to an absolute value. The “Negative” checkbox reverses the sign.

2.2.1.3 Convolution or Filter

The “Convolute” variable defines how the altitude and colour of an individual pixel are altered when compared to its vertical and horizontal neighbours.

This averages the four surrounding pixels, then adds the present pixel value using the “Convolute” value as a weight. The result is then combined with the value in “Background 0-255” using the weight supplied in “Background (0-1.0)”. The background numbers thus supply the method by which the surface tries to return to its original shape, while the filter smooths the image, blurs the edges and provides the method by which holding down one part of the surface will slowly spread its effect over multiple iterations. This calculation is done on the GPU (Graphics Processing Unit), along with the methods which add the camera image to the texture. This saves significant time, allowing approximately ten times more particles than would otherwise be possible.
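One pass of such a filter can be sketched on the CPU as below. The exact blend is an assumption: here the weight mixes the centre pixel linearly with the average of its four neighbours, and the background weight then mixes that result linearly with the background value. Edge pixels reuse their own value for any missing neighbour, which is also an assumption.

```python
def convolute(altitude, weight, background, background_weight):
    """Return a smoothed copy of `altitude` (a list of rows of numbers).

    weight:            0.0 uses only the neighbour average, 1.0 only the pixel.
    background:        the rest value (0-255) the surface relaxes towards.
    background_weight: 0.0 ignores the background, 1.0 snaps straight to it.
    """
    height, width = len(altitude), len(altitude[0])
    result = [row[:] for row in altitude]
    for y in range(height):
        for x in range(width):
            up = altitude[max(y - 1, 0)][x]
            down = altitude[min(y + 1, height - 1)][x]
            left = altitude[y][max(x - 1, 0)]
            right = altitude[y][min(x + 1, width - 1)]
            neighbours = (up + down + left + right) / 4.0
            smoothed = (1.0 - weight) * neighbours + weight * altitude[y][x]
            result[y][x] = ((1.0 - background_weight) * smoothed
                            + background_weight * background)
    return result
```

Repeated calls spread a local dent outwards while the background term slowly pulls the whole surface back towards its rest value.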

2.2.2 Methods of altering movement

The movement of the particle is altered by three different agencies. The camera image and the altitude measure operate on a pixel adjacency measure, while the pressure map operates over a larger scale defined by a grid size of about 20 pixels.

The particle has an initial speed and direction when it is emitted from the agents’ location, which is set in the dialogue.

2.2.2.1 Altitude

This compares the altitude of the current pixel to the altitudes in front, behind, to the left and to the right. In other words, the adjacent pixel values are compared to the value at the current position of the particle to calculate the acceleration and turn of the particle. We then use the parameters “Speed up”, “Slow down”, “Turn left” and “Turn right” to alter the direction and speed of the particle depending on the relative weighted values of the altitudes.
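The comparison might look like the sketch below. The specific rule is an assumption: the particle accelerates when the ground falls away ahead of it, slows when it rises, and turns towards the lower side. Which side counts as left is also a convention chosen here (heading minus a quarter turn), and the four parameters are treated as simple multipliers.

```python
import math


def steer_by_altitude(altitude, x, y, heading, speed,
                      speed_up, slow_down, turn_left, turn_right):
    """Return (new_heading, new_speed) for a particle at pixel (x, y).

    altitude is a list of rows; heading is in radians.
    """
    height, width = len(altitude), len(altitude[0])

    def sample(angle):
        # Altitude of the pixel one step away in the given direction (clamped).
        sx = min(width - 1, max(0, int(round(x + math.cos(angle)))))
        sy = min(height - 1, max(0, int(round(y + math.sin(angle)))))
        return altitude[sy][sx]

    here = altitude[int(y)][int(x)]
    drop_front = here - sample(heading)
    drop_behind = here - sample(heading + math.pi)
    drop_left = here - sample(heading - math.pi / 2)
    drop_right = here - sample(heading + math.pi / 2)

    slope = drop_front - drop_behind   # > 0 means downhill ahead
    speed += (speed_up if slope > 0 else slow_down) * slope
    tilt = drop_right - drop_left      # > 0 means lower ground to the right
    heading += (turn_right if tilt > 0 else turn_left) * tilt
    return heading, max(0.0, speed)
```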

2.2.2.2 Camera Image

If the “Add Image” check box is checked, the “blue” channel of the image is subtracted from the altitude of the surface. If the “Positively” box is checked the value is added instead. This gives a direct connection between the camera image and the particles. It could thus allow a performer to have an immediate effect on the particles, in addition to their control of the position of the agents which are the source of the particles. This might be very useful where the particles have long lives.
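Per pixel, this amounts to something like the following sketch. The clamping to the 8-bit range and the function name are assumptions.

```python
def add_image_to_altitude(altitude, blue, positively=False):
    """Combine one pixel's altitude with the camera's blue channel.

    By default the blue value is subtracted; with positively=True it is added.
    The result is clamped to 0-255 (an assumed way of handling overflow).
    """
    delta = blue if positively else -blue
    return max(0, min(255, altitude + delta))
```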

2.2.2.3 Pressure Map

If the “Use Pressure Map” check box is checked, the pressure map values are added to the altitude of the surface. The pressure map is similar to the altitude calculation except that it happens on a larger scale. The whole texture is divided into a grid, each square being set at 20x20 pixels. When a particle is in a grid square, the particle’s velocity and direction are used, together with those of all the other particles in the square, to set the velocity and direction of the grid square. Thus, when a particle calculates its new trajectory, it looks at the grid squares in front, behind, to the left and to the right of the grid square it is currently within. The new direction is set proportional to the values in the different grid squares, weighted by the distance of the particle to the grid square and by the current velocity and direction of the particle. This mimics some features of conventional CFD (computational fluid dynamics) without the computational complexity of such calculations. If 1.0 is entered in both edit boxes the velocity and direction are unaffected, so 0.0 in “Speed” and “Bend” gives the maximum effect. The “Attract” button reverses the behaviour so that the particles are attracted to each other.
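A much simplified sketch of the grid idea is given below. It is not the program’s implementation: the distance weighting and the “Attract” option are omitted, the four neighbouring squares are taken along the texture axes rather than relative to the particle’s heading, and the way the two parameters blend the result (1.0 keeps the particle unchanged, 0.0 follows the neighbours fully) is an assumed reading of the “Speed” and “Bend” boxes. All names are hypothetical.

```python
import math
from collections import defaultdict

CELL = 20  # grid square size in pixels


def build_pressure_map(particles):
    """Average the velocity vectors of the particles in each grid square.

    particles: iterable of (x, y, heading, speed) tuples, heading in radians.
    Returns {(grid_x, grid_y): (mean_vx, mean_vy)}.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for x, y, heading, speed in particles:
        cell = sums[(int(x) // CELL, int(y) // CELL)]
        cell[0] += math.cos(heading) * speed
        cell[1] += math.sin(heading) * speed
        cell[2] += 1
    return {key: (vx / n, vy / n) for key, (vx, vy, n) in sums.items()}


def apply_pressure(particle, pressure, speed_keep, bend_keep):
    """Blend a particle's motion with the mean flow of the adjacent squares."""
    x, y, heading, speed = particle
    gx, gy = int(x) // CELL, int(y) // CELL
    vx = vy = 0.0
    for key in ((gx + 1, gy), (gx - 1, gy), (gx, gy + 1), (gx, gy - 1)):
        cell_vx, cell_vy = pressure.get(key, (0.0, 0.0))
        vx += cell_vx / 4.0
        vy += cell_vy / 4.0
    target_speed = math.hypot(vx, vy)
    if target_speed == 0.0:
        return particle  # no flow nearby, leave the particle alone
    target_heading = math.atan2(vy, vx)
    # Shortest signed angle from the current heading to the target heading.
    turn = math.atan2(math.sin(target_heading - heading),
                      math.cos(target_heading - heading))
    new_heading = heading + (1.0 - bend_keep) * turn
    new_speed = speed_keep * speed + (1.0 - speed_keep) * target_speed
    return (x, y, new_heading, new_speed)
```

Working on grid squares rather than pixels keeps the cost proportional to the number of particles plus the number of squares, which is the point of the coarse 20x20 grid.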

2.2.3 Life of Particles

The life of the particle is defined by the “limit p” variable, the “Life” variable and the “Restart < v” variable. The last of these is an absolute value: if the velocity of a particle is less than the number in the dialogue, the particle is restarted. The first two values are combined: if the altitude at a particle’s position is less than the number in the “Life” variable, the particle loses a life, up to the number in the “limit p” variable. This supplies the mechanism whereby particles will continue to live as long as the altitude of the surface is above a certain value, which could be determined by any of the factors which adjust the altitude, i.e. other particles, the agent trail, or the convolute function combined with the values set for the background.
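The life rule can be sketched as a small update function. That a particle is restarted once it has lost “limit p” lives, and that its count then resets to zero, are assumptions; the names are hypothetical.

```python
def update_life(lives_lost, altitude, speed,
                life_threshold, limit_p, restart_below_v):
    """Return (lives_lost, restart) after one step.

    life_threshold  : the "Life" value; below this altitude a life is lost.
    limit_p         : the "limit p" value; how many lives can be lost.
    restart_below_v : the "Restart < v" value; slower particles restart.
    """
    if speed < restart_below_v:
        return 0, True
    if altitude < life_threshold:
        lives_lost += 1
    if lives_lost >= limit_p:
        return 0, True
    return lives_lost, False
```

A particle sitting on ground at or above the threshold never loses a life, which is how a group that keeps raising its own surface can persist.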

2.2.4 Visual Display

The colour of the particles can be set with the “Colour”, “Fixed” and “Random” variables, which set the colour of particles emitted at each agent location using a fixed and a random component. If the “map” check box is used then these values are applied to the particles directly. The “prop speed”, “prop life” and “prop altitude” check boxes alter the brightness of the particles dependent on their velocity, their remaining life and the altitude of the surface they are on; these can be inverted using the “prop invert” box.
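One way the brightness options could combine is sketched below. Multiplying the enabled factors together, normalising by `max_speed` and `max_life`, and inverting as one minus the result are all assumptions made for illustration.

```python
def particle_brightness(speed, life_left, altitude, max_speed, max_life,
                        prop_speed=False, prop_life=False,
                        prop_altitude=False, invert=False):
    """Return a brightness factor between 0.0 and 1.0 for one particle."""
    factor = 1.0
    if prop_speed:
        factor *= min(1.0, speed / max_speed)
    if prop_life:
        factor *= min(1.0, life_left / max_life)
    if prop_altitude:
        factor *= altitude / 255.0
    if invert:
        factor = 1.0 - factor
    return factor
```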

2.2.5 Camera input

The variables “Mix Camera” and “Threshold” and their associated check boxes control the way we combine the camera image with our output. We can look at the camera image texels, see whether the blue value is above a certain threshold, and then combine the image with the output in the proportions defined in the “Mix Camera” variable. A further procedure takes the default value which controls the agent movement and shows everything which is visible to the agents; this is activated by using just the “Mix” checkbox.
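For a single texel the threshold-and-mix step might be sketched as follows. The linear blend, the strict comparison against the threshold and the names are assumptions; in the program this work is done on the graphics card.

```python
def mix_camera(output_rgb, camera_rgb, mix, threshold):
    """Blend a camera texel into the output where its blue value is high.

    output_rgb, camera_rgb: (red, green, blue) tuples of 0-255 integers.
    mix: proportion of camera colour in the result, from 0.0 to 1.0.
    threshold: camera texels whose blue value is not above this are ignored.
    """
    if camera_rgb[2] <= threshold:
        return output_rgb
    return tuple(int(round((1.0 - mix) * out + mix * cam))
                 for out, cam in zip(output_rgb, camera_rgb))
```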

2.2.6 Number of particles

By default the program starts with only a thousand or so particles. The pressure map is the main limitation on the number of particles; with this unchecked the limit seems to be around 10,000 on a 2.8 GHz Xeon processor. If the number in “Number of Particles” is changed, all the particles are restarted. This is useful if you have set particles which do not die and need to reset them.

3. Results

Here I shall try to provide a brief summary of the main classes of visual effects and how they are obtained using the rules described above. In some cases it is possible to extrapolate to other visual effects, or to interpolate between those shown, alone or in combination.

3.1 Using Altitude

This takes account of the ability of the particles to keep each other alive as they erode a path through the surface. This path is constantly changing, in much the same way as the path of a river changes on a flood plain.

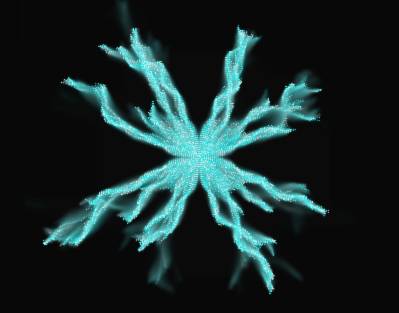

Fig 1

In Fig 1 we can see the particles emanating from a central point and dividing themselves into a number of different channels; the fainter marks show the remains of old routes.

The opposite effect is seen in Fig 2 where the particles are given a negative bend in respect to each other.

Fig 2

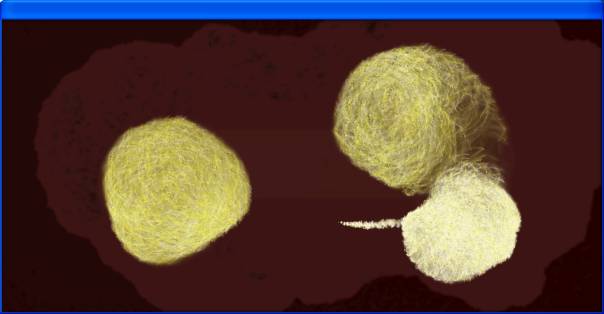

This can be changed into a spiral by making the left and right bend unequal. More interestingly, we can make free-floating balls when we have one negative and one positive bend, as seen in Fig 3, where a group of particles is keeping itself alive by forming a ball.

Fig 3

Several, in fact. Here we are also using the “prop altitude” feature, which lightens or darkens the colour of the particle dependent on the altitude of the underlying surface.

Generally we have to be very careful in setting parameters for keeping a group alive, as the result is very dependent on the time scale we wish to convey. For a slow-moving example such as Fig 3 the balls decay over a period of a couple of minutes, but it is possible to conceive of accidental stable situations which would last a good deal longer. The above situation was surprising, as one of the parameters, the negative turn, would not normally help the group to survive.

In fact it just meant that all the particles going the wrong way around were eliminated, and we were left with the particles which were turning right, i.e. going around the centre of the group in a clockwise direction, which gave the appearance of a rotating ball of string.

3.2 Camera effects

To illustrate this, the following image was produced, in which the particles are beginning to find the edges of the shelves and other areas of lightness in the image.

Fig 4

I have found this feature works best when the image is still and then moves: the particles “take up residence” in the lighter areas of the image, and when movement occurs a great number are released, creating a blurring effect until they find new areas of light to exist within.

3.3 Pressure effects

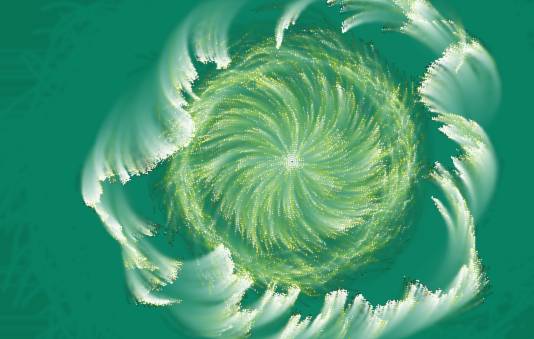

Though this would be best illustrated with a number of images, suffice it to say that it is possible to produce both clockwise and anticlockwise vortices by altering the “Attract” checkbox.

A combination image might then be created, as in Fig 5, where an initial expansion from the centre as in Fig 1 is followed by the application of the pressure system.

Fig 5

In conclusion, then, it seems the pressure system is useful for making fairly coherent vortices. When the speed of the particles is altered by the pressure system you get sudden eccentric changes in velocity and direction which can seem quite naturalistic. Also, if there are several agents together, we find that the proximity of other agents can lead to “handshake” effects: the particles of the two agents repel each other, and you get a flare out along the axis of meeting.

The trouble with the pressure system is the cost in terms of CPU time and errors in the programming. The clockwise and anticlockwise rotations are probably emergent behaviour arising from errors in the coding which give a tilt to right- or left-handed movement. It is also noticeable that the edge effect is incorrect in some respects, as the particles tend to fall towards the right-hand edge.

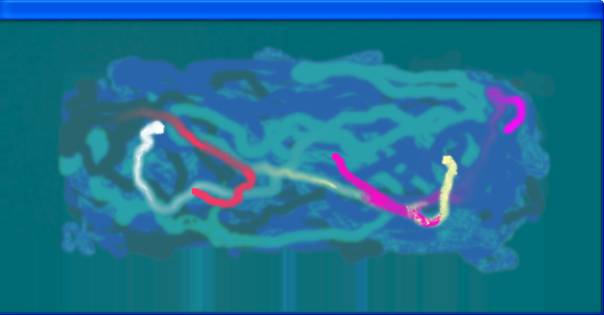

3.4 Some worm effects

For these it is a matter of providing the agent with a tail: the agent footprint makes a dent in the surface, and the particles live in this dent until the surface rebounds.

Fig 6

This also shows the cumulative effect of agent trails producing a layered spaghetti effect. One of the better effects is obtained by switching on the checkbox which sets the colour proportional to altitude, which makes the trails appear as burns through paper. Also, some colour mixing will occur where two or more trails intersect, as the particles emitted from separate agents live in each other’s trails.

4. Conclusions and further work

Whether unpredictable, more naturalistic effects should take precedence over the more formal, worked-out effects is a difficult question that only individual artists can answer in particular circumstances. In this work we have tried to create a framework where multiple modes of expression are possible in a live and interactive fashion.

I use Turner as an example for several reasons: firstly, he is a painter trying to produce illusions on a two-dimensional surface; secondly, the illusions he creates are largely constructed with smoke, light, water and the movement of these; and thirdly, he is very innovative in the use of paint to create these effects. As such this seems a fine point from which to start when we want to consider how we should use illusions and the sense of reality as an underpinning to our intention to produce works of art. The audience must be convinced in some way that something “real” or important is taking place, or they must be drawn into a conversation where their interactions are given another dimension.

My other reason for affection for Turner is that in his later days (it is now thought that his eyesight was failing) he pushed the medium of oil painting to the limits of comprehensibility. The image is so deeply buried within the layers of paint that the illusion itself is a will-o’-the-wisp; we find ourselves pondering the significance of the merest brushstroke, or of the dust settling on the surface of the paint, but even so we are aware that someone, the artist, has been there before us. Here we have less ambitious aims, but we are dealing in time-based illusions: we are looking at tools for the smooth blending of colours that respond continuously to the real world. We are trying to put the naturalistic into the art in several ways: by using the pressure map, which I expect could be vastly improved if we used the methods described in [2]; by using live imagery from a camera; and lastly by adding a level of “knowledge”, i.e. the surface, which responds in real time to itself in a fairly naturalistic way. The camera and this surface could be better integrated, but this should really wait until all three can be combined in a more coherent and complete fashion.

I say all this with some caveats, as I think, in a personal way, that the noise in the system is the art: a totally rational tool gives the artist little margin to do totally unexpected things, and we would be left with a device for manipulating live images, a sort of sophisticated circus mirror show. As it stands the slightly indirect connection between the camera input, through the agent movement, to the mass of particle movement helps it avoid this, but at the same time this could make it incomprehensible to someone seeing the work for the first time.

As it is, the system will be tested both with knowledgeable people and with those who haven’t seen it before, to try to push the possibilities forward. This is happening as part of a project called “Crossings” run by [3]; it is hoped that input from this project will push this work in fruitful directions.

On another note, a few “technical” improvements could be made:

The altitude of the surface could be held within a separate texture to provide a greater range of values than the 256 we are currently using.

It would be a good idea to widen the range of the filter and also to be able to change the shape of the filter profile. This has some impact on performance, however, so we would have to be careful. An interesting shape might be the “cowboy hat”, with the dent in the top, giving local repulsion with global attraction. Again, this would require significant programming of the GPU.

At present the whole surface can be tilted using the mouse, which sets up a streaming effect over the whole surface. It can also be rotated, so one can create symmetrical effects in both the x and the y axis. If the whole surface were formed of a number of polygons, this effect could be repeated in different directions at different places in the image.

References

[1] Attfield, S., Mottram, C., Fatah gen. Schiek, A., Blandford, A., & Penn, A. (2005) Exploring the effects of introducing real-time simulation on collaborative urban design in augmented reality. Proc. International Workshop on Studying Designers'05, pp 369-374, 17-18 October, Aix-en-Provence, France.

[2] Harris, M. J. Fast Fluid Dynamics Simulation on the GPU. Chapter 38 in GPU Gems: Programming Techniques, Tips and Tricks for Real-Time Graphics. University of North Carolina at Chapel Hill.

[3] Mette Ramsgard Thomsen, MAA, PhD, CITA / Centre for Interactive Technologies and Architecture, Royal Academy of Fine Arts, School of Architecture, Philip de Langes Allé / 1435 Copenhagen K / Denmark