Musical Image Generation with Max/MSP/Jitter

Dr. David Kim-Boyle, PhD.

Department of Music, University of Maryland, Baltimore County, Baltimore, U.S.A.

e-mail: kimboyle@umbc.edu

Abstract

The author describes the real-time image generation process used in a recent work for piano, resonant glasses and Max/MSP/Jitter. In this work, which was premiered at the 2004 International Computer Music Conference in Miami, images are generated in an unusual way that involves visually capturing and processing the wave-like motions generated in a liquid by the work’s sonic materials. These visual transformations take place in the Jitter environment with a series of techniques analogous to many of the MSP audio processing techniques employed in the piece. The author will outline some of these processes in detail as well as describe some of the aesthetic considerations involving the integration of sonic and visual materials in the work.

1. Introduction

Shimmer, premiered at the 2004 International Computer Music Conference in Miami, is a recent work for piano, resonant glasses and Max/MSP/Jitter. During a performance, the sounds generated by the computer and piano are sent to a separate, hidden loudspeaker whose cone contains a small quantity of milk; the cone itself is protected by a thin plastic sheath. The shimmering of the milk is digitally captured in real time and processed in Jitter in ways that are analogous to many of the audio processing techniques used in the piece, before being sent to a pair of video monitors on stage.

2. Audio Processing

Two 18 oz glasses are placed inside the piano with a small cardioid microphone suspended inside each. The sounds captured by the microphones are processed with Cycling ’74’s Max/MSP [1] and are also amplified and sent to an onstage stereo pair of loudspeakers. The glasses act as acoustic filters and also reinforce certain resonant tones of the piano. This requires the pianist to be particularly sensitive to these tones and to adjust their touch accordingly.

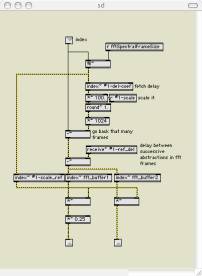

The audio processing performed in the Max/MSP environment uses a series of spectral delay abstractions based on a technique developed by the author [2], as well as TC Electronic’s MegaReverb VST plug-in, which runs on the TC PowerCore FireWire. The delay architecture employed in the spectral delay patch is based on a model in which individual FFT bins of a short-time Fourier transform are delayed. The sizes of the delays, measured in integer multiples of the FFT length, are determined by indexing user-defined buffers which are updated in the signal domain. The spectral delay patch is illustrated in Figure 1.

Figure 1: Spectral delay patch

A signal is used to index a buffer which contains delays, in integer multiples of the FFT length, for each FFT bin. The delay value is then subtracted from the current index to determine the sample number to read from the amplitude and phase buffers of the FFT. A delay value of 3 for bin #7, for example, means that the magnitude and phase components of the resynthesized bin #7 are read from bin #7 of the third previous FFT frame. While this is perhaps a crude way to realize spectral delays, it is computationally inexpensive and simple to implement. This is a particularly important consideration given the additional burden placed on the CPU by the real-time video processing.
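The per-bin delay lookup described above can be sketched outside of Max/MSP as follows. This is only an illustrative sketch, not the author's patch: the function name `spectral_delay` and its arguments are hypothetical, each frame is assumed to arrive as a list of complex bin values (rather than separate amplitude and phase buffers), and bins whose delayed frame does not yet exist are assumed to output silence.

```python
from collections import deque


def spectral_delay(frames, bin_delays):
    """Delay each FFT bin by a whole number of frames.

    frames     -- list of STFT frames, each a list of complex bin values
                  (assumed representation; the patch stores amplitude and
                  phase in separate buffers)
    bin_delays -- per-bin delay measured in frames, playing the role of
                  the user-defined delay buffer
    """
    n_bins = len(bin_delays)
    # Keep just enough past frames to serve the longest delay.
    history = deque(maxlen=max(bin_delays) + 1)
    out = []
    for frame in frames:
        history.appendleft(list(frame))  # history[0] is the current frame
        out_frame = []
        for k in range(n_bins):
            d = bin_delays[k]
            # Read bin k from the frame d steps back; output silence
            # until that frame has actually been received.
            out_frame.append(history[d][k] if d < len(history) else 0j)
        out.append(out_frame)
    return out
```

With `bin_delays = [0, 2]`, for instance, bin 0 passes through unchanged while bin 1 of each output frame is taken from bin 1 of the frame two steps earlier. The cost per frame is one table lookup per bin, which is consistent with the low computational expense noted above.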

Scaling functions, read from another user-defined buffer, are also used to provide amplitude control over the frequency response of each delay abstraction. Like the delay buffers, the scaling buffers are updated in the signal domain. A comb and all-pass filter combination, with variable feedback coefficients, is also used in the spectral delay patch to simulate a reverberant tail.
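A minimal sketch of these two ideas is given below. The paper does not specify the filter topology or where in the signal path the filters sit, so the sketch assumes textbook Schroeder-style time-domain filters; the names `scale_bins`, `feedback_comb` and `allpass`, and all parameter values, are hypothetical.

```python
def scale_bins(frame, gains):
    """Apply a per-bin amplitude scaling (the 'scaling buffer') to one frame."""
    return [value * gain for value, gain in zip(frame, gains)]


def feedback_comb(x, delay, g):
    """Feedback comb filter: y[n] = x[n] + g * y[n - delay]."""
    y = []
    for n, sample in enumerate(x):
        fed_back = y[n - delay] if n >= delay else 0.0
        y.append(sample + g * fed_back)
    return y


def allpass(x, delay, g):
    """Schroeder all-pass: y[n] = -g * x[n] + x[n - delay] + g * y[n - delay]."""
    y = []
    for n, sample in enumerate(x):
        x_delayed = x[n - delay] if n >= delay else 0.0
        y_delayed = y[n - delay] if n >= delay else 0.0
        y.append(-g * sample + x_delayed + g * y_delayed)
    return y


def reverb_tail(x, comb_delay, comb_g, ap_delay, ap_g):
    """Comb followed by all-pass, one assumed way of combining the two."""
    return allpass(feedback_comb(x, comb_delay, comb_g), ap_delay, ap_g)
```

Raising the feedback coefficient `g` towards 1 lengthens the decaying train of echoes each filter produces, which is presumably what makes variable coefficients useful for shaping the tail.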

Finally, a cascading series of TC Electronic MegaReverb VST plug-ins is built into the patch, with reverberation times and high-shelf attenuation parameters adjusted during the performance. In practice, these plug-ins bring out certain resonant frequencies in a way that is musically similar to the effect of the resonant glasses. The reverbs, seven in all, run on the TC PowerCore, which significantly reduces the load on the CPU.

3. Video Processing

The sounds from the piano, amplified through the resonant glasses, and the computer-generated sounds are sent to an offstage loudspeaker sheathed in thin plastic. These sounds generate wave-like ripples and shimmers in a small quantity of milk poured into the speaker cone. Milk is used as the propagation medium rather than water simply because, as a white liquid, it reflects the full color spectrum and therefore offers more potential for processing.

The resonant tones emphasized by the reverberation and the resonant glasses during the piece create visually interesting interference patterns in the milk. The process is a delicate one, however, as the milk can percolate and bubble unpredictably if the loudspeaker is driven too hard. At certain loud points in the piece this is often unavoidable. Examples of both behaviors are illustrated in Figure 2.

Figure 2: Interference patterns (left), Bubbling (right)

A small digital camcorder is suspended directly above the milk with its zoom adjusted such that the milk just touches the wide edges of the aperture. The signal from the camera is processed in the Jitter environment. Jitter is a set of external graphical objects for the Max/MSP programming environment that allows live video processing and other graphical effects to be seamlessly integrated into the MSP audio environment. The processed signal from Jitter then passes through a video mixer before being sent to a pair of video monitors on stage.

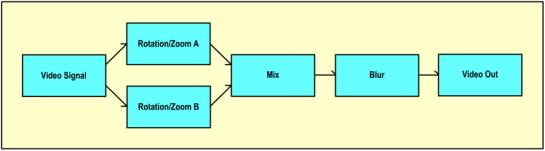

The Jitter processing is relatively straightforward, although because it runs concurrently with the audio processing, total CPU usage can be high at times, which can adversely affect the frame rate of the displayed images. While this problem could be avoided by using two separate CPUs, doing so raises practical performance considerations of its own. The image captured by the camcorder is processed in parallel by two identical chains of objects. The results from each chain are mixed with varying crossfade values and then blurred with a QuickTime effect, in a manner similar to the use of the reverberation plug-ins. This processing chain is outlined in Figure 3.

Figure 3: Video processing path
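The mix-then-blur stage of this path can be illustrated with a small sketch. It is not the Jitter patch itself: images are assumed to be grayscale and stored as nested lists, the function names `crossfade` and `box_blur` are hypothetical, and a simple box blur stands in for the QuickTime effect, whose actual algorithm is not described here.

```python
def crossfade(a, b, mix):
    """Linear crossfade of two equally sized images.

    mix = 0.0 returns image a, mix = 1.0 returns image b.
    """
    return [[(1.0 - mix) * pa + mix * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def box_blur(img, radius=1):
    """Replace each pixel with the mean of its neighbourhood.

    Near the borders, only the neighbours that fall inside the image
    are averaged.
    """
    height, width = len(img), len(img[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(height, y + radius + 1)):
                for xx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            row.append(total / count)
        out.append(row)
    return out


def mix_and_blur(chain_a, chain_b, mix, radius=1):
    """Crossfade the outputs of the two chains, then blur the result."""
    return box_blur(crossfade(chain_a, chain_b, mix), radius)
```

Varying `mix` over time corresponds to the changing crossfade values, while `radius` plays a role loosely comparable to a reverberation time: larger values smear each point of light over a wider area.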

The rotation and zooming process is a simple one. During the performance, the video signal is magnified to varying degrees, rotated and offset. Much of the imagery is focused on the areas where the edges of the milk meet the loudspeaker. The colors are also gradually scaled over the course of the piece. Finally, a JPEG codec is used on the video output signal to help reduce CPU usage. Examples of the resulting images are illustrated in Figure 4.

Figure 4: Video excerpts from the Jitter Patch
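For readers unfamiliar with this kind of transformation, magnification, rotation and offset can be combined into a single inverse mapping, sketched below. This is an assumption-laden illustration rather than the processing used in the patch: the function name `zoom_rotate_offset`, the nearest-neighbour sampling, and the choice to fill uncovered pixels with 0 are all hypothetical.

```python
import math


def zoom_rotate_offset(img, zoom=1.0, angle_deg=0.0, offset=(0.0, 0.0)):
    """Magnify, rotate and shift an image about its centre.

    img       -- grayscale image as a list of rows
    zoom      -- magnification factor (> 1 enlarges the centre region)
    angle_deg -- rotation angle in degrees
    offset    -- (x, y) shift of the output, in pixels
    """
    height, width = len(img), len(img[0])
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Inverse mapping: find the source pixel for each output pixel.
            dx, dy = x - cx - offset[0], y - cy - offset[1]
            sx = (cos_t * dx + sin_t * dy) / zoom + cx
            sy = (-sin_t * dx + cos_t * dy) / zoom + cy
            ix, iy = int(round(sx)), int(round(sy))
            inside = 0 <= ix < width and 0 <= iy < height
            row.append(img[iy][ix] if inside else 0)
        out.append(row)
    return out
```

Sweeping `zoom`, `angle_deg` and `offset` slowly over time gives the kind of gradual reframing described above, for example drifting towards the edge where the milk meets the loudspeaker.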

4. Aesthetic Considerations

The successful artistic integration of sonic and visual mediums involves consideration of many aesthetic issues. Key amongst these are questions of unity. Do the sonic and visual techniques explore the same artistic concerns, and to what extent are the processes employed in one medium translatable to the other?

In Shimmer the sonic and visual processes are unified at a very primitive level in that they are concerned with the transformation of representations. In the visual component of the piece, this simply translates to the processing of the shimmering milk. Musically, it refers to the processing of pre-existing works. In Shimmer the materials performed by the pianist have been drawn from sonorities in Morton Feldman’s 1981 piano work Triadic Memories. Both sonically and visually, the materials themselves become less significant than the resonances one is able to draw from them. The process of their transformation itself becomes, in essence, the focus of the work. The sonic and visual materials are also unified in that the source materials for the visual processing are fundamentally dependent on the sonic materials.

It is often the case that the transformational processes employed in the sonic domain have visual analogies, at least at a broad metaphorical or conceptual level. Spectral processing is akin to color transformation, reverberation finds its counterpart in visual blurring, and visual magnification is like filtering for resonant tones. Unfortunately, it is also often the case that an interesting transformational technique in one medium is not altogether satisfying when applied to the other, although this is more a result of the mapping techniques applied. For example, the visual realization of self-similar or chaotic algorithms can be strikingly beautiful, but when the algorithms are mapped to musical parameters such as pitch the results can be musically crude. The perceptual processes involved in the sonic and visual mediums are quite different, and it is not always the case that musically interesting processes have visual counterparts and vice versa.

Another aesthetic consideration is that when similar conceptual techniques are applied simultaneously in the two mediums, their intrinsic uniqueness can be weakened. Ultimately, the question becomes one of order. Higher-order processes are more likely to translate successfully across mediums than lower-order processes. This approach was taken in Shimmer, where the translatable techniques include those listed earlier: blurring/reverberation, spectral processing/color transformation, and magnification/filtering. While many of these techniques are employed simultaneously, at a micro level their evolution is more independent. Aesthetically, this creates unity at higher levels while granting the material freedom to develop and explore its own unique potential at lower levels of order.

5. Future Work

The generation of interesting visual effects from sonic processes continues to be of artistic interest, and the author is exploring it in ongoing work. Of particular interest are the relationships between spectral processing and color transformations, and between reverberation and blurring. In the latter case the author is developing a convolution blurring technique analogous to those employed in convolution reverberation algorithms. This technique will be applied in a future work for vibraphone and Max/MSP/Jitter.
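The analogy between convolution reverberation and blurring can be made concrete with a generic two-dimensional convolution, sketched below. This is not the technique under development, whose details are not given here; it is a standard direct-form convolution, with the hypothetical name `convolve2d_full`, in which the kernel plays the role that an impulse response plays in a convolution reverb.

```python
def convolve2d_full(img, kernel):
    """Full 2-D convolution of a grayscale image with a kernel.

    Every input pixel is spread across the output in the shape of the
    kernel, much as every input sample is spread over time by the
    impulse response in a convolution reverb. The output is larger than
    the input, the spatial counterpart of a reverberant tail.
    """
    height, width = len(img), len(img[0])
    k_height, k_width = len(kernel), len(kernel[0])
    out = [[0.0] * (width + k_width - 1)
           for _ in range(height + k_height - 1)]
    for y in range(height):
        for x in range(width):
            for j in range(k_height):
                for i in range(k_width):
                    out[y + j][x + i] += img[y][x] * kernel[j][i]
    return out
```

Convolving a single bright pixel with a kernel simply reproduces the kernel, just as feeding an impulse to a convolution reverb reproduces the recorded impulse response.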

6. References

[1] D. Zicarelli, “An Extensible Real-Time Signal Processing Environment for MAX,” in Proceedings of the 1998 International Computer Music Conference, Ann Arbor, MI: International Computer Music Association, pp. 463-466, 1998.

[2] D. Kim-Boyle, “Spectral Delays with Frequency Domain Processing,” in Proceedings of the 7th International Conference on Digital Audio Effects (DAFX-04), Naples, pp. 42-44, 2004.